Embeddings

This docs page will show you how to utilise our Deep Biometrics Research Demo.

Introduction to Embeddings

In natural language processing, it's worth remembering that language models appear to understand human language, but they don't process it as words and letters the way humans do. Instead, an LLM splits text into tokens and represents each token as a vector: an ordered list of numbers.

Vectors?

Vectors are quantities used heavily in physics to represent information that has both magnitude and direction. For example, we can represent somebody's velocity on a compass as a vector with two components: how fast they are moving along the East-West direction and how fast they are moving along the North-South direction.

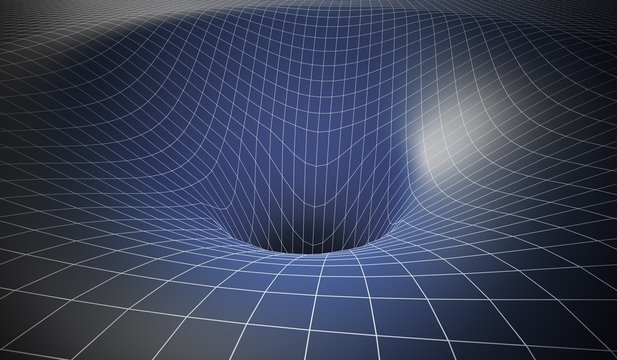
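The compass example can be made concrete in a few lines of Python, the language used later on this page for setup. The two components are enough to recover the vector's magnitude (speed) and direction (compass bearing). The function name and the units (metres per second, degrees) are our own illustrative choices.

```python
from __future__ import annotations

import math


def speed_and_bearing(v_east: float, v_north: float) -> tuple[float, float]:
    """Return (speed, bearing) for a velocity given as East and North components.

    The bearing is in degrees, measured clockwise from North as on a compass.
    """
    speed = math.hypot(v_east, v_north)  # magnitude: sqrt(v_east**2 + v_north**2)
    # atan2(east, north) gives the angle from North towards East; % 360 maps
    # negative angles (westward motion) onto the 0-360 compass range.
    bearing = math.degrees(math.atan2(v_east, v_north)) % 360
    return speed, bearing


# Someone moving 3 m/s East and 4 m/s North at the same time.
speed, bearing = speed_and_bearing(3.0, 4.0)
print(f"speed = {speed} m/s, bearing = {bearing:.2f} degrees")  # speed = 5.0 m/s, bearing = 36.87 degrees
```

Two numbers fully describe this vector. An embedding works the same way, only with far more components.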

In machine learning, we use high-dimensional vectors. These are impossible for a human to picture, because we can perceive at most 3 spatial dimensions. Physicists studying relativity count 4 by including time, and bosonic string theory requires 26 spacetime dimensions. Machine learning models go far higher than this. They represent all the information they interpret as vectors in a coordinate system with hundreds or even thousands of dimensions.
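The familiar Pythagorean length of a 2-component vector extends directly to any number of components $n$. This is what makes high-dimensional vectors workable even though we can't picture them. For a vector $\mathbf{v}$, and for two vectors $\mathbf{a}$ and $\mathbf{b}$ with the same $n$, the length and the Euclidean distance are:

$$
\lVert \mathbf{v} \rVert = \sqrt{v_1^2 + v_2^2 + \cdots + v_n^2},
\qquad
d(\mathbf{a}, \mathbf{b}) = \lVert \mathbf{a} - \mathbf{b} \rVert = \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2}.
$$

With $n = 2$ this gives the speed from the East-West and North-South components. An embedding model uses the same formula with $n$ in the hundreds or thousands.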

Embeddings

Any text fed into an LLM passes through a series of layers. Each layer combines linear transformations (matrix multiplications) with non-linear functions, repeatedly updating the values, and sometimes the size, of the vectors representing our text. From the second-to-last layer of the model, we can extract a single vector representing the original input text. This vector typically has hundreds to a few thousand components. Each component measures some feature of the text that the model learned during training. What that feature means is not labelled and is known only implicitly to the LLM itself.
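The page doesn't say how the per-token vectors at that layer are combined into one vector per text. Mean pooling is one common choice; another is taking the vector of a special token, such as BERT's [CLS] token. The sketch below assumes mean pooling and uses made-up numbers in place of real model outputs.

```python
from __future__ import annotations


def mean_pool(token_vectors: list[list[float]]) -> list[float]:
    """Average per-token vectors component-wise into one fixed-size text embedding."""
    if not token_vectors:
        raise ValueError("need at least one token vector")
    dim = len(token_vectors[0])
    if any(len(vec) != dim for vec in token_vectors):
        raise ValueError("all token vectors must have the same number of components")
    n = len(token_vectors)
    return [sum(vec[i] for vec in token_vectors) / n for i in range(dim)]


# Made-up hidden states for a 3-token input, with 4 components per token.
# A real model would produce hundreds or thousands of components per token.
hidden_states = [
    [0.2, -1.0, 0.5, 0.0],
    [0.4, 0.0, 0.5, 1.0],
    [0.0, 1.0, 0.5, 2.0],
]
embedding = mean_pool(hidden_states)  # approximately [0.2, 0.0, 0.5, 1.0]
```

However many tokens the input has, the pooled embedding always has the same number of components. That fixed size is what lets texts of different lengths be compared.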

Even though we don't know what these learned features are, we observe that similar pieces of text produce vectors with similar components. This lets us keep the vectors in a vector store and search it for the vectors closest to that of a question we'd like to ask, which enables high-quality information retrieval.
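One standard way to measure "similar components" is cosine similarity, which compares the directions of two vectors regardless of their lengths. A minimal sketch follows. The three toy vectors are invented for illustration and do not come from any real embedding model.

```python
from __future__ import annotations

import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of components")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy 3-component "embeddings" with invented values, for illustration only.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.1, 0.9]

print(round(cosine_similarity(cat, kitten), 3))   # close to 1: similar meaning
print(round(cosine_similarity(cat, invoice), 3))  # close to 0: unrelated
```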

These output vectors are referred to as "embeddings", and they are to modern generative AI what fuel is to a car's engine. Many modern LLM applications, such as semantic search and retrieval-augmented generation, would not be possible without vector embeddings.

Vector Search

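Once text has been embedded, finding the stored embeddings most relevant to a query is a nearest-neighbour search over a high-dimensional vector space. This is done either with exact k-Nearest Neighbours (kNN), which compares the query against every stored vector, or with Approximate Nearest Neighbours (ANN) search. ANN uses an index to skip most of those comparisons in exchange for a small loss in accuracy. Stored embeddings are ranked by their geometric distance from the query, usually Euclidean or cosine distance.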
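Exact kNN can be sketched as a brute-force scan over a small, hypothetical in-memory store, using cosine distance (1 minus cosine similarity). The document IDs and vectors below are invented, and real vector stores expose their own APIs, which are not shown here.

```python
from __future__ import annotations

import heapq
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity: 0.0 for identical directions, larger means less similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


def knn_search(
    query: list[float], store: dict[str, list[float]], k: int = 2
) -> list[tuple[str, float]]:
    """Exact k-nearest-neighbour search: score the query against every stored vector.

    Returns the k closest (doc_id, distance) pairs, nearest first.
    """
    scored = ((doc_id, cosine_distance(query, vec)) for doc_id, vec in store.items())
    return heapq.nsmallest(k, scored, key=lambda item: item[1])


# Hypothetical in-memory vector store: document id -> embedding (invented values).
store = {
    "doc-cats": [0.9, 0.1, 0.0],
    "doc-dogs": [0.7, 0.3, 0.1],
    "doc-billing": [0.0, 0.2, 0.9],
}
query = [0.85, 0.15, 0.05]  # pretend this is the embedding of a question about pets

for doc_id, distance in knn_search(query, store, k=2):
    print(doc_id, round(distance, 4))
```

This scan costs O(N × d) operations per query for N stored vectors with d components each. That cost is why large vector stores use ANN indexes, such as HNSW graphs, which examine only a fraction of the vectors but can occasionally miss a true nearest neighbour.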

Each component of an embedding vector represents some feature learned by the neural network. We can loosely picture each component as a measurement of the text along one orthogonal basis direction, somewhat like the components produced by PCA. Unlike PCA components, however, learned features are not ranked by importance and rarely have a human-readable meaning. It follows that if two embeddings have very similar components, i.e. their geometric distance is small, they are likely to have very similar meanings.

Curse of Dimensionality

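Even the most robust vector search algorithms suffer when embeddings are represented by large vectors. For many data distributions, the distances from a query to its nearest and farthest neighbours become nearly equal in high-dimensional spaces. As a result, "nearest" carries less and less information, and index structures that work well in low dimensions lose much of their efficiency.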

When you run a vector search over your vector store to retrieve the most useful documents, the curse of dimensionality can cause it to return results that are not the most relevant.
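The distance-concentration effect can be seen in a small simulation, which is our own illustration and not a benchmark of any vector store. It draws random points uniformly from the unit hypercube and measures how much farther the farthest point is than the nearest, relative to the nearest. The uniform data, the 200 points, and the fixed seed are all assumptions chosen for reproducibility.

```python
from __future__ import annotations

import math
import random


def relative_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """Return (farthest - nearest) / nearest for distances from one random query
    to n_points random points, all drawn uniformly from the unit hypercube [0, 1]^dim.

    A large value means the nearest point is clearly closer than the farthest;
    a value near 0 means every point is roughly the same distance away.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    query = [rng.random() for _ in range(dim)]
    distances = [
        math.dist(query, [rng.random() for _ in range(dim)])  # Euclidean distance
        for _ in range(n_points)
    ]
    nearest, farthest = min(distances), max(distances)
    return (farthest - nearest) / nearest


for dim in (2, 10, 100, 1000):
    print(f"dim = {dim:4d}   relative contrast = {relative_contrast(dim):.3f}")
```

As the dimension grows, the printed contrast shrinks towards 0. The "nearest" neighbours then stand out less and less from everything else, which is exactly what makes high-dimensional search results less reliable.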

A handful of solutions exist:

Use lower-dimensional embeddings: reducing dimensionality discards some of the meaning captured by your embeddings, but the vector search may return much more relevant results.

Use higher-quality embeddings: we will release our own embedding model to help you generate embeddings that are optimised to perform well on vector search metrics.

Rerank your documents: documents retrieved by a vector search can be reranked using a technique known as LLM-as-a-judge. This requires a special LLM designed specifically to judge how relevant a document is to a query. Because the reranker corrects the ordering produced by the initial search, it would also let a developer use much higher-dimensional embeddings if they wish!
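The retrieve-then-rerank idea can be sketched as three steps: take the candidates returned by vector search, score each one against the query, and reorder them. The scorer is passed in as a plain function. The word-overlap scorer here is only a hypothetical placeholder so the example runs; it is not an LLM judge, and no real reranking API is implied.

```python
from __future__ import annotations

from typing import Callable


def rerank(
    query: str,
    candidates: list[str],
    score: Callable[[str, str], float],
    top_n: int = 3,
) -> list[str]:
    """Reorder vector-search candidates by a relevance score (highest first), keep top_n."""
    # sorted() is stable, so candidates with equal scores keep their original
    # vector-search order.
    return sorted(candidates, key=lambda doc: score(query, doc), reverse=True)[:top_n]


def word_overlap_score(query: str, doc: str) -> float:
    """Hypothetical stand-in for a relevance model: the fraction of query words
    that also appear in the document. In an LLM-as-a-judge setup this function
    would instead ask the judging LLM to rate relevance (that call is not shown)."""
    query_words = set(query.lower().split())
    doc_words = set(doc.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & doc_words) / len(query_words)


# Candidates in the order a (hypothetical) vector search returned them.
candidates = [
    "cats sleep for most of the day",
    "how to reset my password",
    "password reset steps for your account",
]
print(rerank("reset password", candidates, word_overlap_score, top_n=2))
```

Keeping the scorer separate from the reordering logic means a real relevance model can replace the placeholder without changing `rerank` itself.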

Embeddings API Coming Soon!

Set Up a Python Virtual Environment

First, check that Python 3 is installed on your machine. In your system terminal, run:

python3 --version

If the command is not found, we recommend installing Python from the official Python website.

Next, write a requirements file named requirements.txt and place it in the root directory of your project folder, with the following contents:

requests==2.28.2

gunicorn==20.1.0

Flask==2.2.3

Jinja2==3.1.2

Werkzeug==2.2.3

flask_restful==0.3.9

flask-cors==3.0.10

Then create a virtual environment, activate it, and install the requirements:

python3 -m venv env

source env/bin/activate

pip install -r requirements.txt

Now, write a file called app.py in the same folder as requirements.txt:

COMING SOON! :)

Making API Calls

When making API calls from a front-end application, you can log the status code of the response object to the console to check whether your request reached our API successfully. The following HTTP status codes are listed with notes to help with error handling.

| Status | Description |
|---|---|
| 200/201 | Request was successful. |
| 400/401 | Error in the request header: if using JavaScript, add "Content-Type": "application/json" to the POST request header. |
| 500 | Internal server error: only upload strings to the endpoint. |
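In Python, the same checks might look like this sketch. It maps each documented status code to its hint and builds, without sending, a POST request with the "Content-Type": "application/json" header set. The payload shape {"text": ...} and any URL you pass in are hypothetical placeholders, since this page does not yet document the API's request schema or endpoints.

```python
from __future__ import annotations

import json
import urllib.request

# Hints copied from the status table; codes not listed there get a generic message.
STATUS_HINTS = {
    200: "Request was successful.",
    201: "Request was successful.",
    400: 'Check the request header: send "Content-Type": "application/json".',
    401: 'Check the request header: send "Content-Type": "application/json".',
    500: "Internal server error: only upload strings to the endpoint.",
}


def explain_status(status: int) -> str:
    """Return the troubleshooting hint for a status code."""
    return STATUS_HINTS.get(status, f"Unlisted status {status}; inspect the response body.")


def build_post_request(url: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request.

    The {"text": ...} payload is a hypothetical placeholder schema.
    """
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

If you use the requests library pinned in requirements.txt, `requests.post(url, json=payload)` serialises the payload and sets the `Content-Type: application/json` header for you. You can then pass `response.status_code` to `explain_status`.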

To test that you have everything set up correctly, we'll make a simple request to the Ping endpoint. Hitting this endpoint acts as a health check on the Docs API service; it won't affect your account in any way.
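A health check against the Ping endpoint could be scripted with Python's standard library, as sketched below. This page doesn't give the endpoint's URL or HTTP method. The sketch therefore assumes a plain GET request to a placeholder URL (`PING_URL` is hypothetical) and treats an HTTP 200 response as healthy.

```python
from __future__ import annotations

import urllib.request

# Hypothetical placeholder: substitute the real Ping endpoint URL.
PING_URL = "https://api.example.com/ping"


def ping(url: str = PING_URL, timeout: float = 5.0) -> bool:
    """Return True if the endpoint answers a GET request with HTTP 200, else False."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # urllib.error.URLError and HTTPError, refused connections and
        # timeouts are all subclasses of OSError.
        return False
```

Once you have the real URL, `print(ping("<real Ping URL>"))` should print `True` when the service is reachable.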

HTTP requests are the backbone of the web. Without them, our browsers couldn't communicate with web servers to load the pages we see.

This library is available on most Unix-like systems, and can be used to make HTTP requests to any HTTP server.