Deep Learning

The Math Behind the Madness

Deep Learning: Crash Course

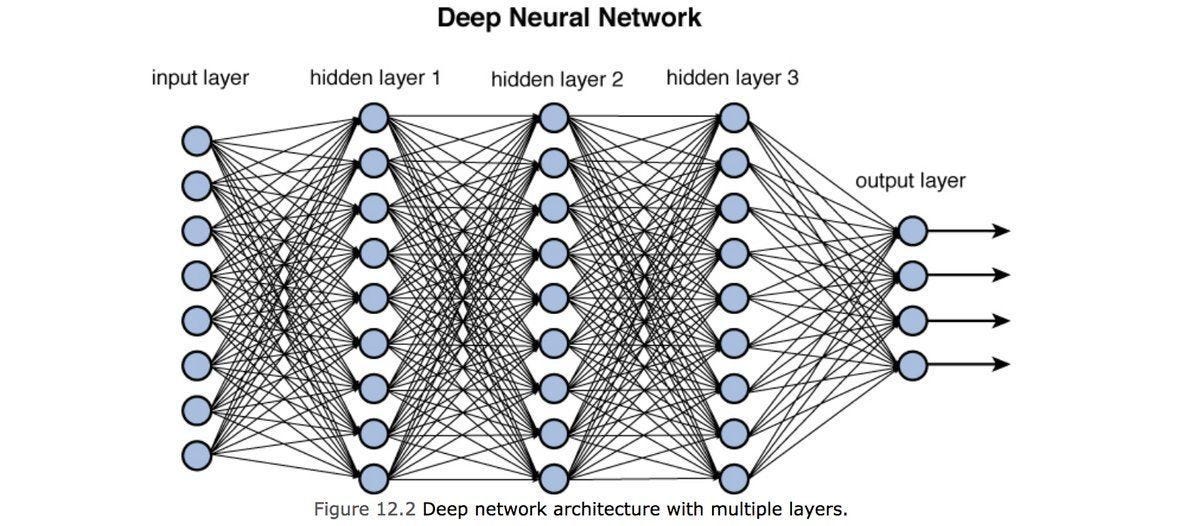

Deep learning refers to any machine learning algorithm implementing a model through stacks of "hidden layers", for purposes related to making predictions as a function of some inputs to this model. Deep learning, therefore, is almost always a Supervised Learning Technique, as it requires labelled training data.

Origins- Boltzmann Machines

Early on in the field, a popular technique for modelling the human brain was a Boltzmann Machine, which is, in simple terms, a fully connected graph characterized by some total energy, or Hamiltonian, H. The Hamiltonian is an energy-based quantity, which in quantum mechanics denotes the total kinetic and potential energy of a subatomic particle, and is named after Irish Physicist Rowan Hamilton.

A complete graph

For this reason, Boltzmann Machines were a type of energy-based model.

The Hamiltonian was characterized by the on/off (0 or 1) state of each vertex of the graph, and the related values of the graph's adjacency matrix. The Boltzmann Machine sought to predict the state of each vertex using a Boltzmann Distribution from thermal physics. It turns out that the likelihood of a given vertex being in the "on" or "1" state was given by the corresponding PDF, the Softmax Function.

Restricted Boltzmann Machines

Source: https://miro.medium.com/v2/resize:fit:1199/1*N8UXaiUKWurFLdmEhEHiWg.jpeg

Early on in the field, Anglo-Canadian computer scientist Geoffrey Hinton proposed the concept of Restricted Boltzmann Machines (RBF's). RBF's are characterized by replacing the traditional complete graph of a Boltzmann Machine with hidden layers, and assigning one layer as the input and one as the output layer.

As per the traditional Boltzman Machine, the RBF would predict outputs following a softmax PDF, for classification tasks. It was found, however, that this model could also perform regression on various tasks.

Gradient Descent

As the mathematics underlying this esoteric field of computer science became more fleshed out, more calculus-based methods were introduced to understand the behaviour of the neural network. The common algorithm used by all neural networks today is gradient descent.

Here's a primer on how it works.

Understanding Gradients

Source: wikipedia

In multivariable calculus, a gradient is a vector obtained at a point on the surface of a function which indicates the direction of fastest increase. You determine this naturally by calculating the partial derivatives of that function with respect to each coordinate. In deep learning, the goal of training a model is to determine its parameters in such a way that the error between a prediction and a ground-truth label is minimized. To do this, we simply compute the gradient of our error function over a batch of predictions with respect to the weights or parameters of the hidden layers themselves. This means we want dL/dW and dL/db for the weights and biases, respectively.

These gradients indicate the direction in which the loss is increasing fastest with respect to their respective parameters- so subtracting them from the starting W and b values should result in a decrease of the loss function. Gradient descent is simply a greedy (short-sighted) algorithm for searching a parameter space for the best sets of weights and biases required to minimize error on predictions, using techniques from calculus.

Backpropagation

It may seem unintuitive as to how the weights in each layer of the network are updated. This part of the algorithm is referred to as backpropagation.

Backpropagation is a fancy machine learning term for the chain rule from calculus. Each layer's gradient is computed by multiplying derivatives using the chain rule as follows:

dL/dW = dL/dy * dy/dW

Where y is a vector and W is a hidden layer generating that vector (prediction) by operating on some input.

Congratulations for reading this far- you should now be familiar at least with some of the jargon of data scientists!

In order to understand this material in-depth, it's recommended to take courses, or code out a neural network from scratch using a library like numpy, as an exercise for the reader.